Achieving true trustworthiness in artificial intelligence is an ambitious goal, and the problem is far from being solved.

Towards the Fair Use of Artificial Intelligence

AI Research

Although AI systems are widely used in both the public and private sectors, the technology is often not fully understood or properly used. While current AI systems can, for example, recognize music or pictures well, it is fundamentally questionable whether they can predict individual behaviour, such as a candidate's future success in a job during recruitment.

AI systems require close monitoring and adjustments to ensure that they work in a way that is beneficial for businesses, society and the planet.

For an AI system to be trustworthy, it needs to fulfil several core requirements, according to the EU's High-Level Expert Group on Artificial Intelligence (AI HLEG):

- AI systems should empower people by enabling them to make informed decisions and by upholding their fundamental rights. Appropriate oversight mechanisms must also be ensured, which can be achieved through human-in-the-loop, human-on-the-loop, and human-in-command approaches.

- AI systems must be safe and robust. They have to be accurate, reliable, and reproducible, with a fallback plan in case something goes wrong.

- Adequate data governance mechanisms must be ensured, taking into account the quality and integrity of the data and ensuring legitimate access to it, in addition to full respect for privacy and data protection.

- Data, systems, and AI business models should be transparent. Traceability mechanisms can help achieve this. Furthermore, AI systems and their decisions should be explained in a way that is tailored to the specific stakeholder. When interacting with an AI system, humans need to be aware of the system's limitations as well as its capabilities.

- Unfair bias must be avoided, since it can have a number of detrimental effects, such as marginalizing vulnerable groups and exacerbating discrimination and prejudice. To foster diversity, AI systems should be accessible to all users, regardless of disability, and involve relevant stakeholders at every stage of development.

- AI systems should benefit all people, including future generations. It is therefore essential to ensure that they are sustainable and environmentally friendly. Their social and societal impact should also be carefully evaluated.

- Mechanisms should be put in place to ensure responsibility and accountability for AI systems and their outcomes. Adequate and accessible redress should also be ensured.

Acknowledging the current imperfections of artificial intelligence and the intensive research needed to meet these core requirements for our clients, we are involved in several national and European research consortia that investigate what makes AI trustworthy and how fairness in AI systems can be achieved in all situations.

Our Impact

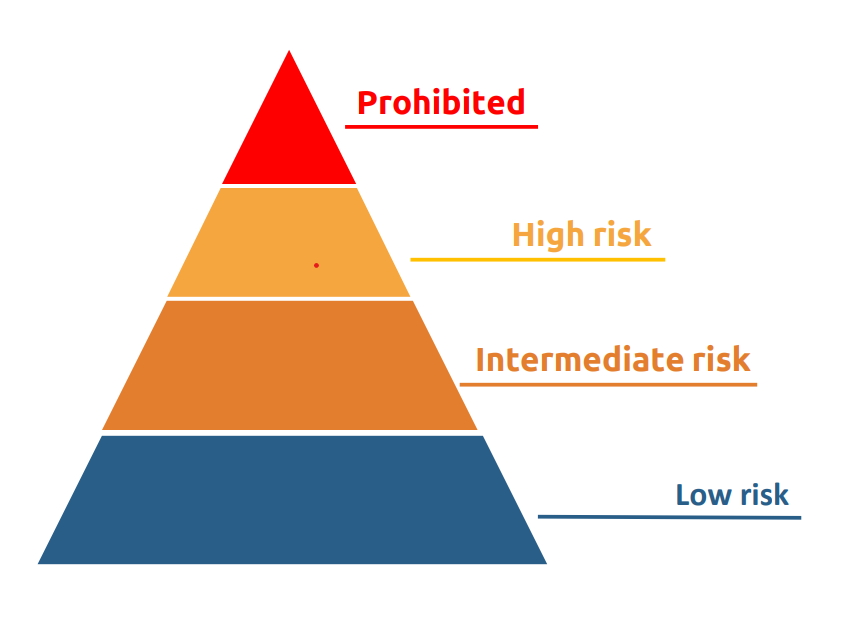

The regulatory landscape for AI technology is changing, with many new regulations already enacted or on the horizon. These regulations will affect many companies and organisations that use AI in the European Union.

Examples

Fairness and trustworthiness in AI systems will become of increasing importance in the coming years. Companies and organisations will have to carry out mandatory risk self-assessments when using AI systems.

Get ahead of the curve and work with us on developing and implementing trustworthy AI. Drawing on our research, we can assess the AI systems you use or intend to develop and help them meet high quality standards.

We are happy to support and participate in new projects that can bring us closer to achieving trustworthy AI for all. Those who work with us benefit from early participation in the crucial work of improving AI systems and making them more sustainable.

What We Work on Regarding Fair AI

leiwand.ai already has a track record of providing national and European stakeholders with research and know-how that has made their work with AI technology more trustworthy, improving their knowledge on the subject for future projects as well.

We work with a wide spectrum of industries and clients who want their AI systems to be fair for people and the planet. We therefore base our missions on the issues at hand, finding solutions or educating our clients on AI development in all forms.

Digital Humanism in Complexity Science

At leiwand.ai, we believe that all AI systems that are put into operation should be trustworthy. With a Digital Humanism grant from the Vienna Business Agency and the Vienna Science and Technology Fund (WWTF), leiwand.ai and its consortium partner Industrie 4.0 created a roadmap to Trustworthy AI. This foresight activity aims to explore possible future scenarios of AI, their consequences for trustworthiness, and the measures derived from them.

A roadmap to the future of trustworthy AI

leiwand.ai and the Complexity Science Hub Vienna have partnered in a consortium to develop a roadmap for digital humanism at the Complexity Science Hub (CSH). Digital Humanism aims to put people and the planet back at the centre of the digital transformation. For leiwand.ai, it is especially important to bring its knowledge about trustworthiness in AI to the table and to emphasize algorithmic fairness and justice throughout the project.

Algorithmic Contracts

From formation to performance, the use of algorithmic contracts is growing. The European Law Institute initiated a project on algorithmic decision-making systems in the various stages of the contract life cycle, in order to assess and further develop the current level of protection that existing EU law affords consumers and other interested parties. As a project team member, leiwand.ai co-founder and CTO Rania provides AI expertise, assists in the development of scenarios for the use of algorithmic contracts, and helps assess the feasibility and effectiveness of proposed legal safeguards from a technical perspective.